British police forces are planning to trial Artificial Intelligence schemes to predict and target potential criminals before an offence has been committed.

Sir Andy Marsh, the head of the College of Policing, which sets and maintains national standards, said that around 100 projects are currently trialling Artificial Intelligence in police forces across the country, including schemes to reduce the manpower hours spent on menial tasks such as paperwork.

However, the initiative also includes “predictive analytics” schemes to target supposed future criminals before they commit a crime. The approach has been likened to Philip K. Dick’s 1956 sci-fi classic The Minority Report, later adapted to film in 2002 by Steven Spielberg, in which a group of mutants with predictive powers is tasked with helping the police carry out “pre-crime” arrests of people who will supposedly commit a crime in the future.

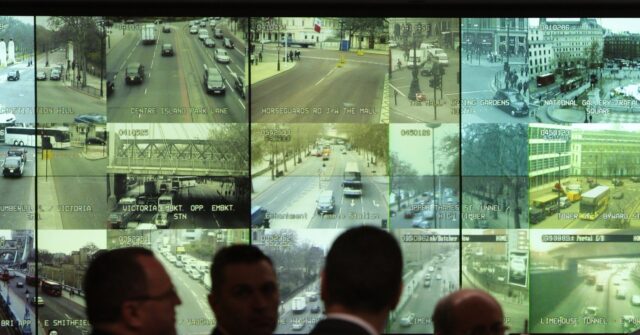

According to a report from The Telegraph, the UK government has committed £4 million ($5.4m) to creating an “interactive AI-driven map” of Britain, which it hopes will be able to point out crimes before they happen by 2030. Another area of use would be spotting the early signs of fights or other anti-social behaviour so that police can stop them escalating, or deploying officers to areas likely to see a knife crime offence.

Marsh said that such predictive policing could also be applied to identify the men who pose the greatest threat to women and girls, saying: “We know the data and case histories tell us that, unfortunately, it’s far from uncommon for these individuals to move from one female victim to another, and we understand all of the difficulties of bringing successful cases to bear in court.”

Home Secretary Shabana Mahmood, who has been a leading force behind the nationwide expansion of live police facial recognition cameras, reportedly said she wanted to transform surveillance in Britain with the help of AI so that “the eyes of the state can be on you at all times.”

Incredibly, Mahmood reportedly even compared her idea favourably to the Panopticon, a Georgian super-prison concept later used by the French philosopher Michel Foucault to “illustrate the proclivity of disciplinary societies [to] subjugate its citizens”.

Speaking to the Tony Blair Institute and Tony Blair himself, she is reported to have remarked: “When I was in justice, my ultimate vision for that part of the criminal justice system was to achieve, by means of AI and technology, what Jeremy Bentham tried to do with his Panopticon. That is that the eyes of the state can be on you at all times… I think there’s big space here for being able to harness the power of AI and tech to get ahead of the criminals, frankly, which is what we’re trying to do.”

The announcement of AI law enforcement schemes comes in the wake of a major policing scandal involving failures of Artificial Intelligence.

Just last week, Craig Guildford was forced to resign as chief constable of West Midlands Police after it was revealed that the decision to ban fans of the Israeli club Maccabi Tel Aviv from a football match against Aston Villa in Birmingham was partly based on false claims generated by Microsoft’s Copilot AI chatbot.

The police force had initially claimed that its erroneous justifications for banning the supposedly violence-prone Maccabi supporters were based on false information gathered from Google searches. However, it later admitted that officers had relied on the Microsoft chatbot, which in turn had “hallucinated” a violent football match in Britain involving the Israeli club that never took place.

Plans to expand, rather than reduce, police reliance on AI have drawn pushback from lawmakers. Conservative MP David Davis lamented that while the Minority Report film was billed as a “dystopian sci-fi” flick, police leadership are now openly embracing its dystopian aspects.

“If an AI system deems you to be at risk of committing a crime, how do you go about proving the AI is wrong, and you pose no threat? The impact on your life when falsely accused of something is enormous,” Davis said.

“The use of these sorts of predictive policing algorithms creates a postcode lottery of justice, reinforcing existing biases and inequalities. Infusing those systems with AI will only exacerbate the injustice,” he warned.

“If the Government really wishes to start tackling the 94 per cent of crimes currently going unsolved, then it should focus on neighbourhood policing, stop wasting resources on things like ‘non-crime hate incidents’, which can amount to censorship, and tackle low-level, high-impact offending like burglary and phone theft.”

Follow Kurt Zindulka on X: @KurtZindulka or e-mail to: kzindulka@breitbart.com
